My colleague David Crotty has a rant at Bench Marks wherein he suggests that Nature’s blogging advocacy is just a shallow attempt to get more content for Nature Blogs, and that scientists blogging is just a fad that can’t replace mainstream media coverage of science and won’t amount to much otherwise. He’s certainly entitled to his opinion, but I think there’s another way to see things and I’d like to present a counterpoint to his Nicholas Carr-ying on.

I’ve joined Mendeley as Community Liaison.

Reference managers and I have a long history. All the way back in 2004, when I was writing my first paper, my workflow went something like this:

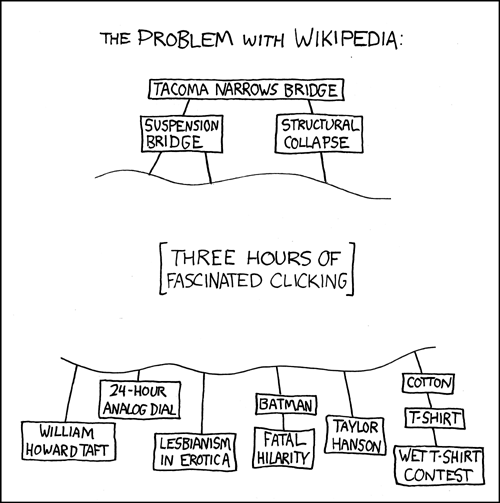

“I need to cite Drs. A, B, and C here. Now, where did I put that paper from Dr. A?” I’d search through various folders of PDFs, organized according to a series of evolving categorization schemes, and rifle through ambiguously labeled folders in my desk drawers, pulling out things I knew I’d need handy later. If I found the exact paper I was looking for, I’d then open Reference Manager (v6, I think) and enter the citation details, each in its respective field. If Reference Manager found the article, I’d then select it and add it to the group of papers I was accumulating. If it didn’t find it, I’d then go to PubMed and search for the paper, again entering each citation detail in its field, and then do the required clicking to get the .ris file, download that, then import that into Reference Manager. Then I’d move the reference from the “imported files” library to my library, clicking away the 4 or 5 confirmation dialogs that occurred during this process. On to the next one, which I wouldn’t be able to find a copy of, and would have to search PubMed for, whereupon I’d find more recent papers from that author, if I was searching by author, or other relevant papers from other authors, if I was searching by subject. Not wanting to cite outdated info, I’d click through from PubMed to my school’s online catalog, re-enter the search details to find the article in my library’s system, browse through the results until I found a link to the paper online, download the PDF and .ris file (if available), or actually get off my ass and go to the library to make a copy of the paper. As I was reading the new paper from Dr. B, I’d find some interesting new assertion, follow that trail for a bit to see how good the evidence was, get distracted by a new idea relevant to an experiment I wanted to do, and emerge a couple hours later with an experiment partially planned and wanting to re-structure the outline for my introduction to incorporate the new perspective I had achieved.
Of course, I’d want to check that I wouldn’t be raising the ire of a likely reviewer of the paper by not citing the person who first came up with the idea, so I’d have some background reading to do on a couple of likely reviewers. The whole process, from the endless clicking away of confirmation prompts to the fairly specific PubMed searches which nonetheless pulled up thousands of results, many of which I wasn’t yet aware of, made for extraordinarily slow going. It was XKCD’s Wikipedia problem writ large.

Twitter Weekly Updates for 2009-02-22

- If it’s just hosting you need, I’ve got way excess capacity in my dreamhost account. I’d be happy to set you up… re: http://ff.im/ZB7T #

- Twitter Updates for 2009-02-15 http://ff.im/14tNd #

- Nice summary! re: http://ff.im/14eMe #

- Wow. I understand wanting to avoid eye-strain and all that, but it is an electronic device after all. How hard… re: http://ff.im/12PKx #

- The main difference is that a quiche is made from egg custard, whereas a fritatta is usually just egg, without… re: http://ff.im/14kHk #

- Liked “Genetic Future : The 1000 Genomes Project is holding back genetics!” http://ff.im/14cTx #

- Will it take the media as long to realize they lost the speed battle as it did record co’s to realize they lost the digital content battle? #

Twitter Updates for 2009-02-15

- Twitter Updates for 2009-02-14 http://ff.im/13I0x #

- Liked “Econbrowser: Tribune reports that 28% San Diego County owe more on their mortgage than the house is worth;” http://ff.im/13IqJ #

- I’m only surprised the number isn’t higher. re: http://ff.im/13IqJ #

- Richard, doesn’t the WSJ logic, as you explain it, only work under zero-sum conditions? To get a smaller… re: http://ff.im/13EsR #

- @sdbn Digg for medicine has been tried: http://tinyurl.com/b7crd6 in reply to sdbn #

- That’s only sometimes, and xp had the same behavior. It is annoying. #

- @kejames I don’t know of a client that threads replies, but Troy’s Twitter Script for greasemonkey works if you click through from client in reply to kejames #

- RT @sdbn: Protip: you can quickly look up San Diego Biotech companies by typing the SDBN URL followed by the first letter: http://sdbn.org/c #

- RT @sciam: Now up: Steven Benner. LiveScience’s on his talk yesterday on 8-nucleotide (rather than 4) genetic code http://is.gd/jB6M #AAAS09 #

- RT @psiquo: Science news in crisis http://ff.im/-14ec9 (mrgunn:Throwing down the gauntlet for @sciam @alexismadrigal @BoraZ to get audience) #

Twitter Updates for 2009-02-14

- I hope you don’t have an EPOCH FAIL today! http://xkcd.com/376/ re: http://ff.im/12U0Y #

- Liked “It works. I put “resisting salty snack chips” into http://gopproblemsolver.com/ “The solution to your…” http://ff.im/12U5B in reply to jayrosen_nyu #

- Could this be the Science Social Networking killer app? http://ff.im/12Ud1 #

- @Barbarellaf If you use Tweetdeck, you can do the categorization yourself. in reply to Barbarellaf #

- @gregaustin1 You as well, Greg. in reply to gregaustin1 #

- @GregorMacdonald Shall we call them the Globe and Fail? in reply to GregorMacdonald #

Could this be the Science Social Networking killer app?

There are tons of social tools for scientists online, and their somewhat lukewarm adoption is a subject of occasional discussion on FriendFeed. The general consensus is that the online social tools which have seen explosive growth are the ones that immediately add value to an existing collection. Some good examples of this are Flickr for pictures and YouTube for video. I think there’s an opportunity to similarly add value to scientists’ existing collections of papers, without requiring any work from them in tagging their collections or anything like that. The application I’m talking about is a curated discovery engine.

There are two basic ways to find information on the web – searches via search engines and content found via recommendation engines. Recommendation engines become increasingly important where the volume of information is high, and there are two basic types of these: human-curated and algorithmic. Last.fm is an example of an algorithmic recommendation system, where artists or tracks are recommended to you based on correlations in “people who like the same things as you also like this” data. Pandora.com is an example of the other kind of recommendation system, where human experts have scored artists and tracks according to various components and this data feeds an algorithm which recommends tracks which score similarly. Having used both, I find Pandora to do a much better job with recommendations. The reason it does a better job is that it’s useful immediately. You can give it one song, and it will immediately use what’s known about that song to queue up similar songs, based on the back-end score of the song by experts. Even the most technology-averse person can type a song in the box and get good music played back to them, with no need to install anything.
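To make the “people who like the same things as you also like this” idea concrete, here is a minimal sketch in Python. It is not how Last.fm actually works; the users, artists, and the `recommend_by_cooccurrence` function are all made up for illustration, and the scoring is just a count of shared likes.

```python
from collections import Counter

# Hypothetical data: each user and the set of artists they like.
likes = {
    "ann": {"Artist A", "Artist B", "Artist C"},
    "bob": {"Artist A", "Artist B", "Artist D"},
    "cat": {"Artist B", "Artist D"},
    "dan": {"Artist E"},
}


def recommend_by_cooccurrence(user, likes, top_n=3):
    """Suggest items liked by users whose tastes overlap with `user`.

    Each candidate item is weighted by how many liked items the
    other user shares with `user`.
    """
    mine = likes[user]
    scores = Counter()
    for other, theirs in likes.items():
        if other == user:
            continue
        overlap = len(mine & theirs)
        if overlap == 0:
            continue  # no shared taste, so this user tells us nothing
        for item in theirs - mine:
            scores[item] += overlap
    # Highest score first; ties broken alphabetically for stable output.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, _ in ranked[:top_n]]


print(recommend_by_cooccurrence("ann", likes))
print(recommend_by_cooccurrence("dan", likes))
```

Note what happens for the hypothetical user `dan`: nobody else shares his one liked artist, so the function has nothing to offer him. That is the up-front usefulness gap that an expert-scored system avoids.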

Since the uneven success of online social tools for scientists is largely attributed to a lack of participation, I think a great way to pull in participation by scientists would be to offer that kind of value up-front. You give it a paper or set of papers, and it tells you the ones you need to read next, or perhaps the ones you’ve missed. My crazy idea was that a recommendation system for the scientific literature, using expert-scored literature to find relevant related papers, could do for papers what Flickr has done for photos. It would also be exactly the kind of thing one could do without necessarily having to hire a stable of employees. Just look at what Euan did with PLoS comments and results.
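Here is a rough sketch of what “give it a paper or set of papers, and it tells you the ones you need to read next” might look like with expert-scored literature. Everything in it is an assumption for illustration: the paper IDs, the three scoring dimensions, the scores themselves, and the choice of cosine similarity as the way to compare papers.

```python
import math

# Hypothetical expert scores (0 to 1) for each paper along three
# made-up dimensions: (signalling, imaging, genomics).
expert_scores = {
    "paper_1": (0.9, 0.1, 0.0),
    "paper_2": (0.8, 0.2, 0.1),
    "paper_3": (0.0, 0.1, 0.9),
    "paper_4": (0.1, 0.9, 0.1),
}


def cosine(u, v):
    """Cosine similarity between two equal-length score vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def recommend_papers(seed_ids, scores, top_n=2):
    """Rank papers outside the seed set by similarity to the seeds' average profile."""
    seeds = [scores[s] for s in seed_ids]
    # Average the seed papers' scores dimension by dimension.
    profile = tuple(sum(col) / len(seeds) for col in zip(*seeds))
    candidates = [
        (cosine(profile, vec), pid)
        for pid, vec in scores.items()
        if pid not in seed_ids
    ]
    # Most similar first; ties broken by paper ID for stable output.
    candidates.sort(key=lambda t: (-t[0], t[1]))
    return [pid for _, pid in candidates[:top_n]]


print(recommend_papers(["paper_1"], expert_scores))
```

A single seed paper is enough to get a ranked list back, because the scores already exist on the back end. The reader doesn’t have to tag, rate, or install anything first.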

Science social bookmarking services such as Mendeley, or perhaps search engines such as NextBio, are perfectly positioned to do something like this for papers, and I think it would truly be the killer app in this space.

Claiming my blog on the new Nature Blogs.

Great work, guys! More on Friendfeed’s Life Scientists room.