How AI can be used for bioterrorism

Humanity has climbed to the top of the food chain, but as the COVID-19 pandemic demonstrated, it’s a precarious perch. It’s not just directly pathogenic viruses that we need to worry about, either. Small molecules and proteins synthesized by bacteria also pose a risk to human life, to crops and livestock, and to the environment. A terrorist would realize these risks through genetic engineering, the means by which new capabilities are introduced to an organism through changes in its DNA. This is normally a time-consuming and laborious process, but AI and biological design tools can vastly accelerate it. A terrorist might design a novel virus that causes human illness, or one that kills livestock, makes soil infertile, or fouls the ocean.

How dangerous are current AI systems?

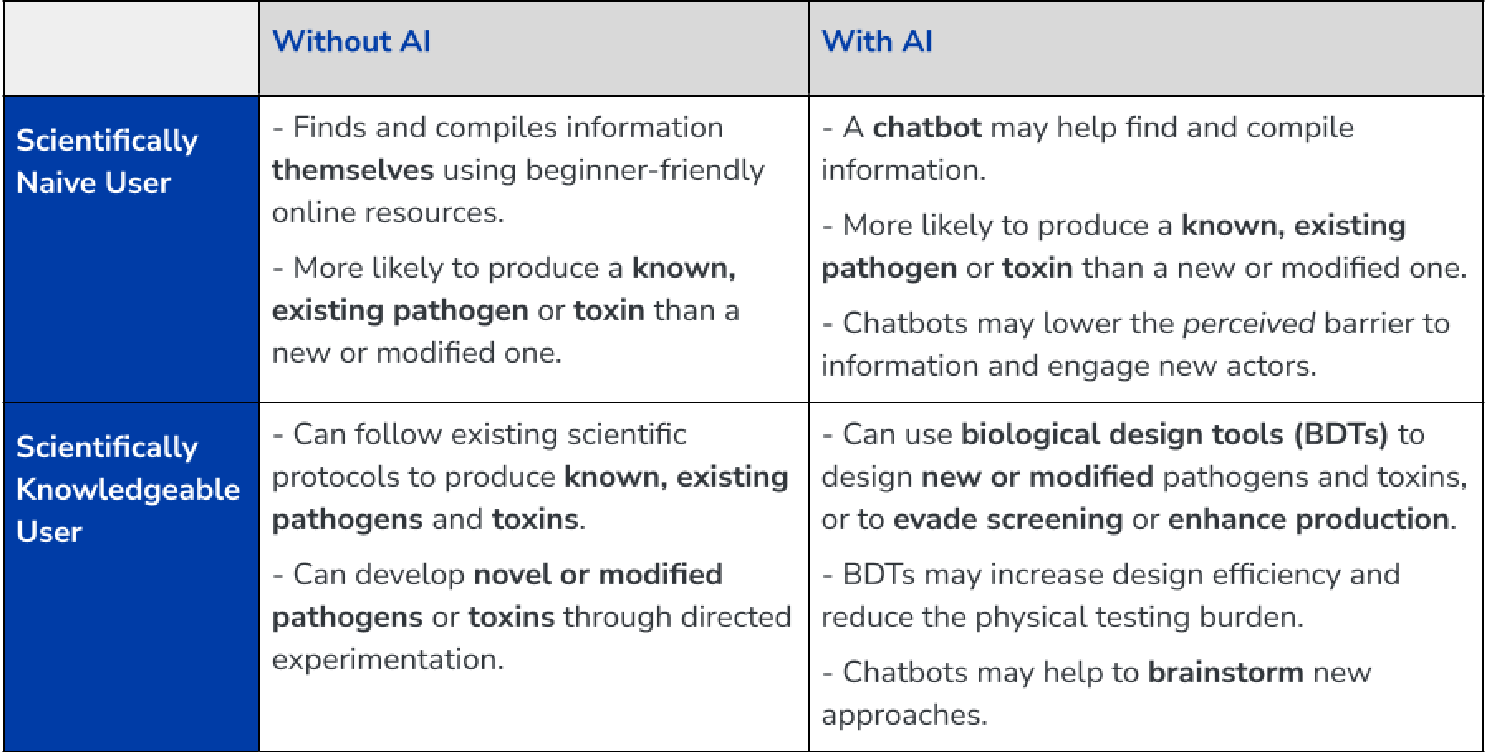

In 2023, RAND tasked teams of researchers with creating a plan for designing and delivering a bioweapon. Teams that used an LLM were not significantly more capable than those that used only the internet, though one team with a jailbreaking expert outperformed the others. The study was underpowered and, importantly, tested only commercial LLMs, not models whose weights are publicly available and thus amenable to fine-tuning.

OpenAI ran a similar study and found that LLMs slightly increase capabilities across all phases of pathogen development. Participants with access to a version of GPT-4 with safety features removed developed better plans, which suggests that an actual terrorist group could gain a similar benefit from open-weight models. While open-weight models currently lag behind GPT-4 because they lack access to technical and medical information, they are improving rapidly.

CSET summarizes the current risks and highlights that threat actors who previously focused on conventional weapons might now perceive a lower barrier to adopting bioterrorism.

How quickly might capabilities improve?

AI Capabilities

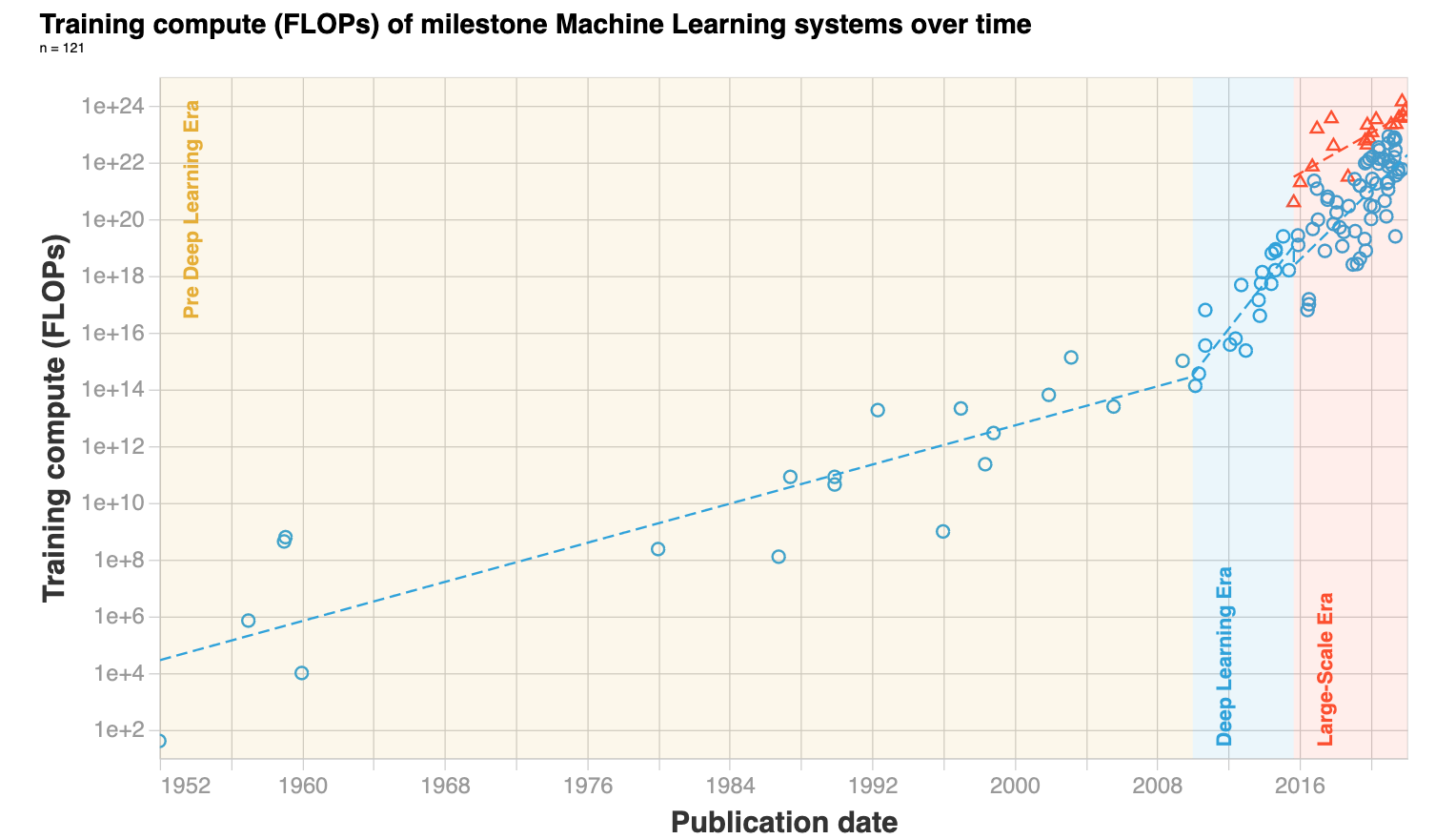

In 2019, GPT-2 couldn’t reliably count to 10. Today’s models can work autonomously to design experiments and analyze data. The amount of compute used to train systems has increased dramatically and is currently doubling about every 9 months, with room to continue for some time. AI firms might run out of high-quality training data next year, which would slow capability gains, but synthetic data is being used as a substitute with some success. Systems that can autonomously design novel chemical agents already exist, and their performance is limited mostly by the capabilities of the underlying model. Algorithms are also improving faster than Moore’s law, enhancing model capability at a given level of compute.
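To make the doubling rate concrete, the short Python sketch below converts a doubling period into a growth factor over a given horizon. It assumes smooth exponential growth at the 9-month doubling period quoted above, which is a simplification; the function name `compute_growth_factor` is illustrative, and the output is not a forecast.

```python
def compute_growth_factor(months_elapsed: float, doubling_months: float = 9.0) -> float:
    """Multiplicative growth after `months_elapsed` months.

    Assumes steady exponential growth with a fixed doubling period
    (9 months by default, the figure quoted in the text).
    """
    if doubling_months <= 0:
        raise ValueError("doubling_months must be positive")
    return 2.0 ** (months_elapsed / doubling_months)


if __name__ == "__main__":
    for years in (1, 3, 5):
        factor = compute_growth_factor(12 * years)
        print(f"{years} year(s): about {factor:.1f}x the training compute")
```

Under that assumption, training compute grows roughly 2.5x per year and 16x over three years.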

Biotech Capabilities

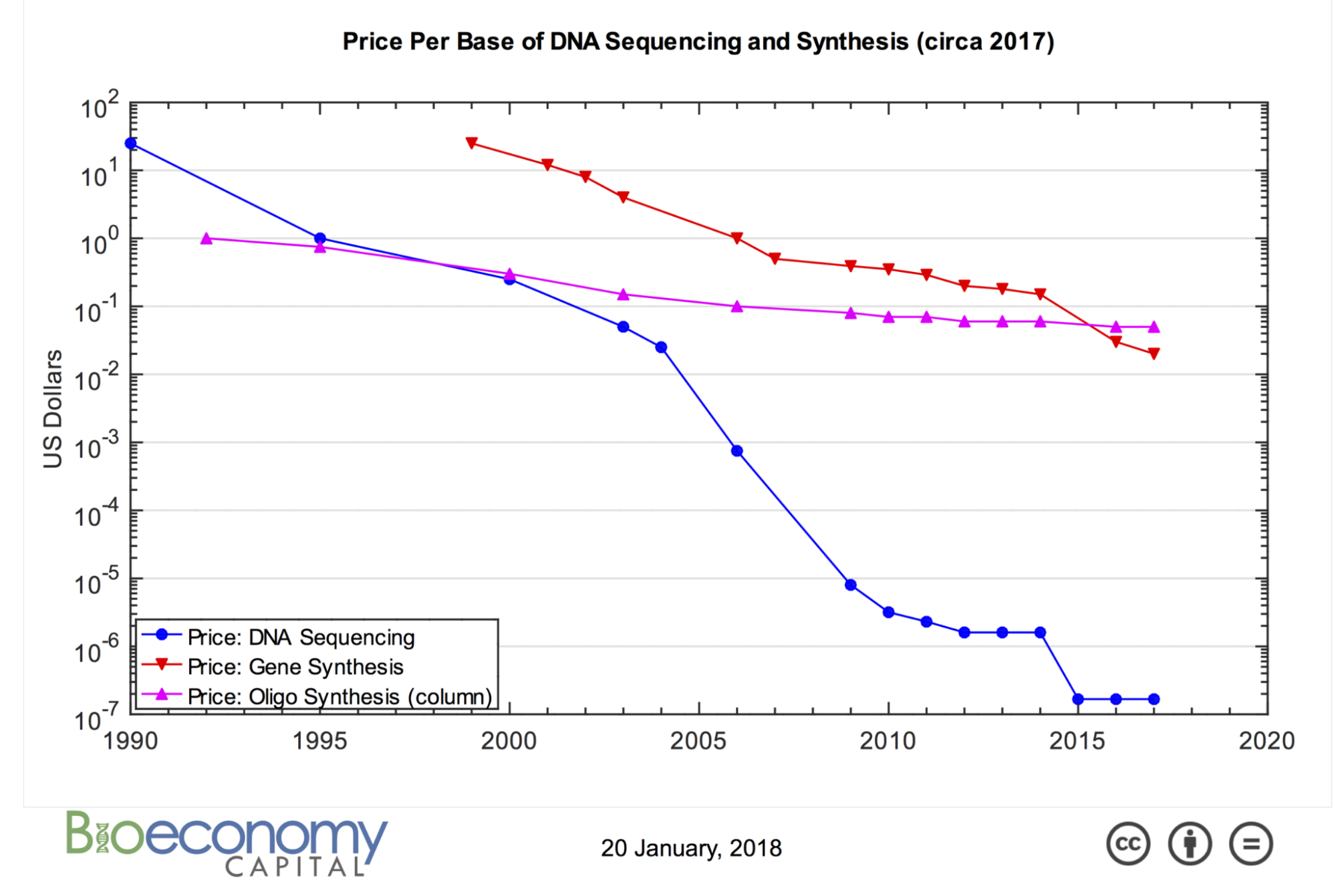

AI isn’t the only field improving exponentially. The cost of gene synthesis has dropped by several orders of magnitude over the past few decades, and it’s estimated that it would cost about $1,000 to synthesize the 1918 influenza virus, to which there’s little existing immunity.

Taken together, the increased capabilities of AI models, wider access to information, and the falling cost of synthesis and of the technical expertise required indicate a high likelihood of a bioterrorism incident within 5 years. A rough estimate of how soon an AI-assisted bioterrorism incident might occur would consider the following:

- An estimated 30,000 PhDs have the knowledge to assemble a virus today

- Human-level machine intelligence is currently estimated to arrive within 37 years, and that estimate has moved 8 years closer over just the past 6 years

- 0.02% of terrorist attacks are bioterrorism, a share that is rising due to perceived lower barriers to entry

- 7,000 terrorist attacks occur per year

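At today’s share, that works out to between one and two attempted bioterrorism attacks per year (0.02% of 7,000 is about 1.4), and potentially several per year as the share rises, with the likelihood of success increasing each year and the cost within the budget of nearly any organized group.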
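The arithmetic behind this estimate can be sketched in a few lines of Python. The inputs are the figures listed above. The `annual_share_growth` parameter is a hypothetical knob for the rising share, not a number taken from any source, and the result is an expected count of attempts, not a probability that any attempt succeeds.

```python
def expected_bio_attempts(attacks_per_year: float, bio_share: float) -> float:
    """Expected number of bioterrorism attempts per year."""
    return attacks_per_year * bio_share


def project_attempts(attacks_per_year: float, bio_share: float,
                     annual_share_growth: float, years: int) -> list[float]:
    """Expected attempts for each of `years` years, starting with the current year.

    `annual_share_growth` is a hypothetical assumption: the fraction by which
    the bioterrorism share of all attacks grows each year (0.25 means +25%).
    """
    return [
        expected_bio_attempts(attacks_per_year,
                              bio_share * (1 + annual_share_growth) ** year)
        for year in range(years)
    ]


ATTACKS_PER_YEAR = 7000   # figure from the list above
BIO_SHARE = 0.0002        # 0.02%, figure from the list above

if __name__ == "__main__":
    baseline = expected_bio_attempts(ATTACKS_PER_YEAR, BIO_SHARE)
    print(f"Baseline: about {baseline:.1f} attempts per year")
    # Illustrative only: the 25% growth rate is made up for the example.
    for year, n in enumerate(project_attempts(ATTACKS_PER_YEAR, BIO_SHARE, 0.25, 5)):
        print(f"Year {year}: about {n:.1f} attempts")
```

With the listed figures the baseline is about 1.4 attempts per year. How quickly that number rises depends entirely on the growth assumption chosen.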

What we should do now to reduce misuse risk of AI for bioterrorism

- Standardize screening for biorisk across nucleic acid synthesis companies, biological design tools, and cloud labs. The Administration for Strategic Preparedness and Response’s (ASPR) Screening Framework Guidance provides a good starting point.

- Standardize evaluation for biorisk across frontier model providers. OpenAI and Anthropic have presented reasonable plans in this area, and we look to MLCommons and the AI Safety Institute Consortium to provide a forum for establishing best practices. The Center for Long-term Risk estimated that establishing a sandbox for ongoing evaluations of AI-enabled biological tools will require an investment of $10 million annually.

- Funders and publishers of academic research should require a statement on the capabilities of any models developed that show dual-use behavior, similar to the ethics statements required for research involving people or animals. Institutional Biosafety Committees are a good framework for this.

Harm-reduction recommendations not specific to AI

- Establish global coordination for efforts to detect pandemic-capable pathogens. Routinely sequencing air and water in travel hubs and airplanes could detect an increase in pathogens before people start to show symptoms.

- Harden society against the inevitable next pandemic

- Fund research on resilient crops, such as those that can be grown indoors or that resist heat. Protein synthesized by fermenting natural gas could cost $3-5/kg, but building enough capacity to support the global population would take an estimated 2-4 years, assuming $500m in capital expenditure and diversion of existing capacity at companies such as Calysta Inc., Unibio A/S, Circe Biotechnologie GmbH, String Bio Pvt Ltd, and Solar Foods.

- Accelerate vaccine development. A framework for accelerating development in times of crisis could shorten the time-frame to recovery. The Center for Population-Level Bioethics may be a good partner for this work.

- Install air sanitation devices on public transportation, in aircraft, and in businesses.

  - 200 nm UV light eliminates 90% of pathogens after about a minute of exposure. At these levels it’s safe for people, businesses would likely install units to reduce lost productivity, and, unlike mask-wearing, it requires nothing from individual employees.

  - HEPA filtration has similar benefits, reducing airborne COVID-19 particles by 65%, and could tie into genetic surveillance activities.

- Develop and stockpile effective PPE

- Establish a liability regime for gain-of-function research and for public release of model weights. The risk of liability would provide an incentive for organizations to manage externalities associated with their product development and research.